Scope of the task

I’ve recently started to touch on various ongoing approaches to artificial general intelligence. I think the field of AI has started to reach an inflection point where we can have these sorts of discussions with some degree of credibility. While I recently came out in support of the legendary programmer John Carmack as a sort of dark horse candidate to get to the grail of AGI first, he is by no means the only one in the race.

Those that have studied reinforcement learning for any length of time almost certainly know the name Richard Sutton. He’s one of the OGs of research in the field, and at the end of 2022 he released a roadmap for an AGI, dubbed the Alberta plan. As one might expect, there’s a lot of substance there, so I want to take it one step at a time and start discussing what he proposes for the essential components for the generally intelligent agent.

In a paper titled “The Quest for a Common Model of the Intelligent Decision Maker”, Sutton lays out what he has observed to be common aspects of the intelligent agent, as researched across a variety of disciplines. Research from neuroscience to economics has something to say about the intelligent decision maker, and indeed it’s reasonable to think each field has some insights that can contribute to the development of AI. Far from being an academic exercise, developing a common model to unite the work in these disparate fields is a strong first step in cooperative interdisciplinary research that will likely be necessary for the development of AGI.

Some immediate challenges

One of the downsides of such an interdisciplinary approach is that researchers tend to come with their own preconceived notions, jargon, and mental frameworks. If we’re to truly reach across the aisle and work with scientists from a broad variety of fields, we are going to have to settle on some sort of terminology that doesn’t place emphasis on the jargon from any one field.

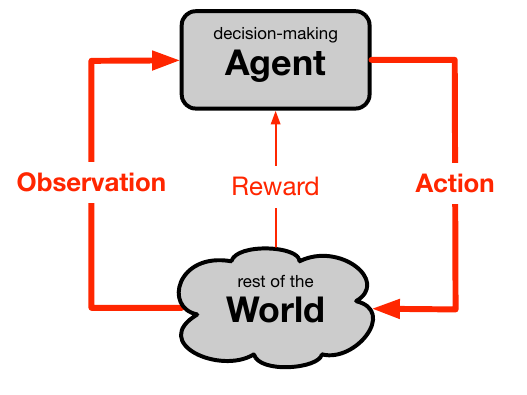

As an example, in reinforcement learning we speak of the agent, which takes actions on its environment and receives observations and rewards in return. In psychology, we would refer to the organism, responses and stimuli. In control theory, we would talk about the controller, control signals, and the state of the plant. These terms convey very similar meanings, but each has a contextual component that carries with it a certain set of assumptions. These assumptions are valid in their own domain, but may not be necessary in the more generalized intelligent agent.

Sutton settles on referring to the essential components as the agent, world, observations, rewards and actions. He mostly keeps the jargon of reinforcement learning, with the exception of swapping out “environment” for “world”.
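To make the vocabulary concrete, here is a minimal sketch of the agent-world loop. The `CoinWorld` class, the `run` helper, and the toy reward rule are all hypothetical, invented for illustration; nothing here comes from Sutton's paper beyond the five terms themselves.

```python
import random

class CoinWorld:
    """Hypothetical toy world: reward 1 when the action matches a hidden coin."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.coin = self.rng.randrange(2)

    def step(self, action):
        # The world, not the agent, decides the reward.
        reward = 1.0 if action == self.coin else 0.0
        observation = self.coin  # reveal the coin that was just played
        self.coin = self.rng.randrange(2)
        return observation, reward

def run(world, agent, steps):
    """Let the agent act for a number of steps and return the total reward."""
    observation, total = None, 0.0
    for _ in range(steps):
        action = agent(observation)                # agent -> world
        observation, reward = world.step(action)   # world -> agent
        total += reward
    return total

# An agent that ignores its observation and always plays 0.
total_reward = run(CoinWorld(), lambda observation: 0, steps=10)
```

The same loop could be relabeled with the psychology or control theory terms without changing a line of logic, which is the point of settling on one neutral vocabulary.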

If we take a step back here and look at what Sutton is proposing, we can see that he is trying to get to the essence of the common principles from each discipline. He’s not simply throwing all of the concepts and principles from each discipline into a blender to see what comes out. In the paper, he aptly describes this as taking the intersection rather than the union of these fields, and this approach can be applied to the content of the research as well as the terminology.

So our definition of what constitutes an observation should be very broad. We don’t want to constrain ourselves to requiring that an observation be visual in nature, as it would be for biology, or even a series of vector observations as in the case of control theory. The observation is merely the information the agent takes in from the environment to make its decisions.

This sort of generalized thinking about decision making is a relatively new approach. There really isn’t much research out there on the generalized concept of decision making outside of the context of biological organisms or engineering systems. Sutton opines that perhaps such a generalized model of decision making will one day become an independent field of study, and I agree that this would probably be the most fruitful field of study, from a pure research perspective.

The goal of the decision maker

In reinforcement learning we’re most familiar with the agent receiving some reward signal from the environment. This is a scalar signal, meaning just a single number with no directional component, and it is outside of the agent’s direct control. In other words, the agent can’t simply dispense rewards to itself for taking no action.

The agent’s goal, at least in the way we formulate it using reinforcement learning, is the maximization of the cumulative sum of this reward over time. Certainly, we can discount the rewards at each time step to weight near-term rewards more heavily than distant ones, but the central idea is the same: get as large a reward signal from the environment as possible. This notion even works in the case where the agent receives a penalty at each time step: it will simply seek to minimize this penalty. Oftentimes, this is done by solving the task in as few steps as possible.
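As a minimal sketch of that objective, the discounted return can be computed like this. The function name `discounted_return` and the default `gamma` are my own choices for illustration.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of rewards, where the reward at step t is weighted by gamma**t."""
    total = 0.0
    # Work backwards so each step computes G_t = r_t + gamma * G_{t+1}.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total

# With a penalty of -1 per step, finishing in 3 steps beats finishing in 5.
short_episode = discounted_return([-1, -1, -1], gamma=1.0)
long_episode = discounted_return([-1, -1, -1, -1, -1], gamma=1.0)
```

Setting `gamma` to 1.0 recovers the plain cumulative sum, and smaller values shrink the weight of rewards further in the future.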

This raises the interesting question around goal states in the world. Why don’t we simply define some state we want to reach and then compare the state of the world to that goal state at each time step? This is actually feasible in some situations, but doesn’t quite work in the generalized case. Suppose we want to deploy an intelligent agent to control a power plant. How could we create a goal to maintain some state? This isn’t immediately obvious without some sort of additive reward.
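One way to picture the additive alternative is a per-step reward that penalizes drifting away from a target value. This is a hypothetical sketch: the `setpoint_reward` function, the single scalar measurement, and the numbers are invented for illustration, and a real plant would involve far more than one reading.

```python
def setpoint_reward(measured, target):
    """Per-step reward: zero at the target, more negative the further away."""
    return -abs(measured - target)

# Summing the per-step rewards scores how well the state was maintained.
readings = [498.0, 501.0, 500.0]
total = sum(setpoint_reward(reading, 500.0) for reading in readings)
```

There is no single goal state to reach and stop at here; the agent is rewarded for staying close to the target at every step, for as long as it runs.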

How do we build the decision making agent?

The question of how to distill an agent into a few core components that transcend the many fields of study is actually quite difficult. It’s practically guaranteed that we’re not going to capture the essence of every field, but we can still do our best to get close enough. Sutton attempts to do that, and I think he largely does a good job, though with the disclaimer that I’m not an economist or neuroscientist.

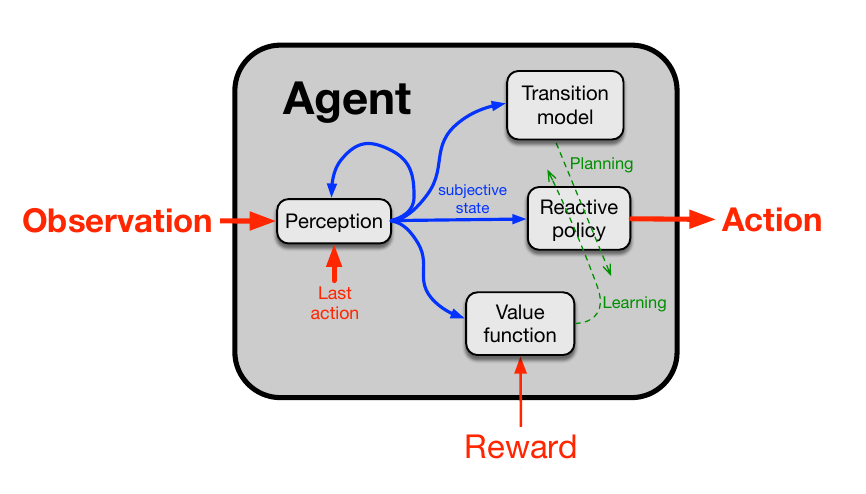

Sutton boils it down to four essential components: perception, the transition model, the reactive policy, and the value function.

Perception deals with processing a sequence of observations into the internal subjective state for our agent. For instance, when we’re implementing deep Q learning on the Atari games, we can take the previous 4 frames from the environment, downsize and grayscale them and then stack them. This stack of heavily modified screen images constitutes the subjective experience of the agent. It’s clear that they map to the true external state of the world, but they are not identical to it.
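The frame stacking described above can be sketched with NumPy alone. The names `preprocess` and `FrameStack` are hypothetical, the 84 by 84 target size is an assumption (a common choice, not something stated here), and nearest-neighbour sampling stands in for whatever resizing a real pipeline would use.

```python
from collections import deque

import numpy as np

def preprocess(frame, size=84):
    """Grayscale an RGB frame and shrink it by nearest-neighbour sampling."""
    gray = frame.mean(axis=2)  # average the colour channels
    rows = np.linspace(0, gray.shape[0] - 1, size).astype(int)
    cols = np.linspace(0, gray.shape[1] - 1, size).astype(int)
    return gray[np.ix_(rows, cols)].astype(np.float32) / 255.0

class FrameStack:
    """Keeps the k most recent processed frames as the subjective state."""

    def __init__(self, k=4):
        self.frames = deque(maxlen=k)

    def push(self, frame):
        processed = preprocess(frame)
        if not self.frames:
            # First frame: fill the stack with copies so the shape is fixed.
            self.frames.extend([processed] * self.frames.maxlen)
        else:
            self.frames.append(processed)  # the oldest frame drops out
        return np.stack(self.frames)

stack = FrameStack()
blank_screen = np.zeros((210, 160, 3), dtype=np.uint8)  # Atari-sized frame
state = stack.push(blank_screen)  # array of shape (4, 84, 84)
```

The array returned by `push` is what the agent actually sees: a lossy summary of recent screens rather than the emulator's true internal state.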

Another example might be a chessboard, when viewed through the eyes of a grand master. The GM can see beyond the mere arrangement of the pieces and into the nuances of the potential future moves. There may be threats looming a couple moves ahead as well as opportunities, that aren’t actually represented in the state of the world. Rather, they exist as a product of the agent’s perception of the current state of the world.

More generally, our most important requirements are that the subjective state is quickly computed and that we don’t have to revisit the entire history of the world to construct the current subjective state. We can simply take the previous subjective state, current observation and action taken to get our most recent state for the agent.
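Here is a minimal sketch of such an incremental update. The `update_state` function and the decayed-average rule inside it are hypothetical stand-ins; the only property that matters is that the new state is built from the previous state, the action, and the current observation.

```python
def update_state(prev_state, action, observation, decay=0.5):
    """Build the new subjective state without revisiting the full history."""
    # Blend each old trace value with the matching new observation value.
    trace = [
        decay * old + (1 - decay) * new
        for old, new in zip(prev_state["trace"], observation)
    ]
    return {"trace": trace, "last_action": action}

state = {"trace": [0.0], "last_action": None}
for action, observation in [(1, [1.0]), (0, [1.0])]:
    state = update_state(state, action, observation)
```

Each update costs the same amount of work no matter how long the agent has been running, which is what keeps perception fast.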

The next critical component is the reactive policy. It maps the agent’s subjective state to an action. In reinforcement learning, this takes the form of the epsilon-greedy action selection algorithm or even direct policy approximation with a deep neural network. It’s tightly linked to the perception component, and like perception it must be fast. It does no good if the agent takes more time to perceive and decide what to do than there is time between successive time steps in the world.
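Epsilon-greedy selection is short enough to sketch in full. The function name, the `rng` parameter, and the list of action values passed in are assumptions made for illustration.

```python
import random

def epsilon_greedy(q_values, epsilon=0.1, rng=random):
    """Pick a random action with probability epsilon, else the best-valued one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore
    # Exploit: index of the largest estimated action value.
    return max(range(len(q_values)), key=lambda a: q_values[a])

greedy_action = epsilon_greedy([0.1, 0.9, 0.3], epsilon=0.0)
```

Note that this is a single cheap lookup per step, in keeping with the requirement that the reactive policy be fast.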

Of course, an agent that is seeking to maximize total reward over time is going to need to develop some sense of how valuable actions are for a given subjective state. This is where the value function comes in. Value functions appear in many disciplines outside of AI research, and as such it makes a lot of sense to incorporate one into the agent. For instance, in economics these are called utility functions and can play a role in consumer preferences.

Naturally, value functions play a large role in reinforcement learning. The value function is often approximated by a neural network, commonly called a critic. It can take the observation or both the observation and action as input; either way, its role is to determine the value of a state or state-action pair.

The last piece of the puzzle is the transition model. This is the agent’s representation of the dynamics of the world. Given some state and action, what will the resulting state be? This is critical in formulating an effective reactive policy as well as a robust perception of the world. By learning how actions affect the world, the agent can learn which actions to take for a given subjective state to maximize its total rewards over time.

Critically, the transition model doesn’t require that the agent actually take any action at all. It can merely predict outcomes based on hypothetical actions. This process is what we call reasoning or planning, and from our own observations of our mind, it’s obviously critical to decision making at a high level.
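A one-step lookahead is about the simplest form this kind of planning can take. In the sketch below, `plan_one_step`, the toy number-line world, and the hand-written `model` and `value` functions are all hypothetical; a fuller planner would also account for rewards along the way and look more than one step ahead.

```python
def plan_one_step(state, actions, model, value):
    """Pick the action whose predicted next state has the highest value."""
    # No action is taken in the world; the model only imagines outcomes.
    return max(actions, key=lambda action: value(model(state, action)))

# Toy world: the state is a position on a number line, the goal is at 3.
def model(state, action):
    return state + action  # predicted next position

def value(state):
    return -abs(3 - state)  # closer to the goal is better

best_action = plan_one_step(0, [-1, 0, 1], model, value)
```

Here the agent compares three imagined futures and only then commits to a move, which is the distinction between planning and simply reacting.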

Wrapping it up

Sutton set out to form a common model of an intelligent agent that goes beyond any one field and can be used in as many fields as possible. In this, I think he largely succeeded. He acknowledges it leaves out many components that one might expect, such as curiosity, exploration, and motivation. The reason for their absence is that these features do not play a prominent role across a broad array of fields, like, say, control theory.

This is merely a starting point, rather than the destination. In a later post we’re going to talk about Sutton’s work on subtasks and, later on, the Alberta plan for artificial general intelligence. Both of these will build upon the work here.